Unlocking the Future of AI Integration: Making Sense of MCP and Its Awesome Support in n8n

Hey there! In the whirlwind world of artificial intelligence (AI), we're all trying to figure out how to make these amazing large language models (LLMs) actually work for us, right? One of the biggest challenges is getting them to play nicely with other systems and tools. That's where the Model Context Protocol (MCP) comes in, and its recent integration into the super-handy automation platform n8n is a game-changer. At Syrvi.ai, we're all about making AI practical and accessible, so let's dive into what this all means for you and your AI-driven workflows.


This post demystifies MCP: we'll break down its key parts, explain why it's a big deal, and show how n8n’s native support is shaping the future of AI automation. Think of it as your friendly guide to the next level of AI integration!

What is MCP? A New Way for AI to Talk to the World

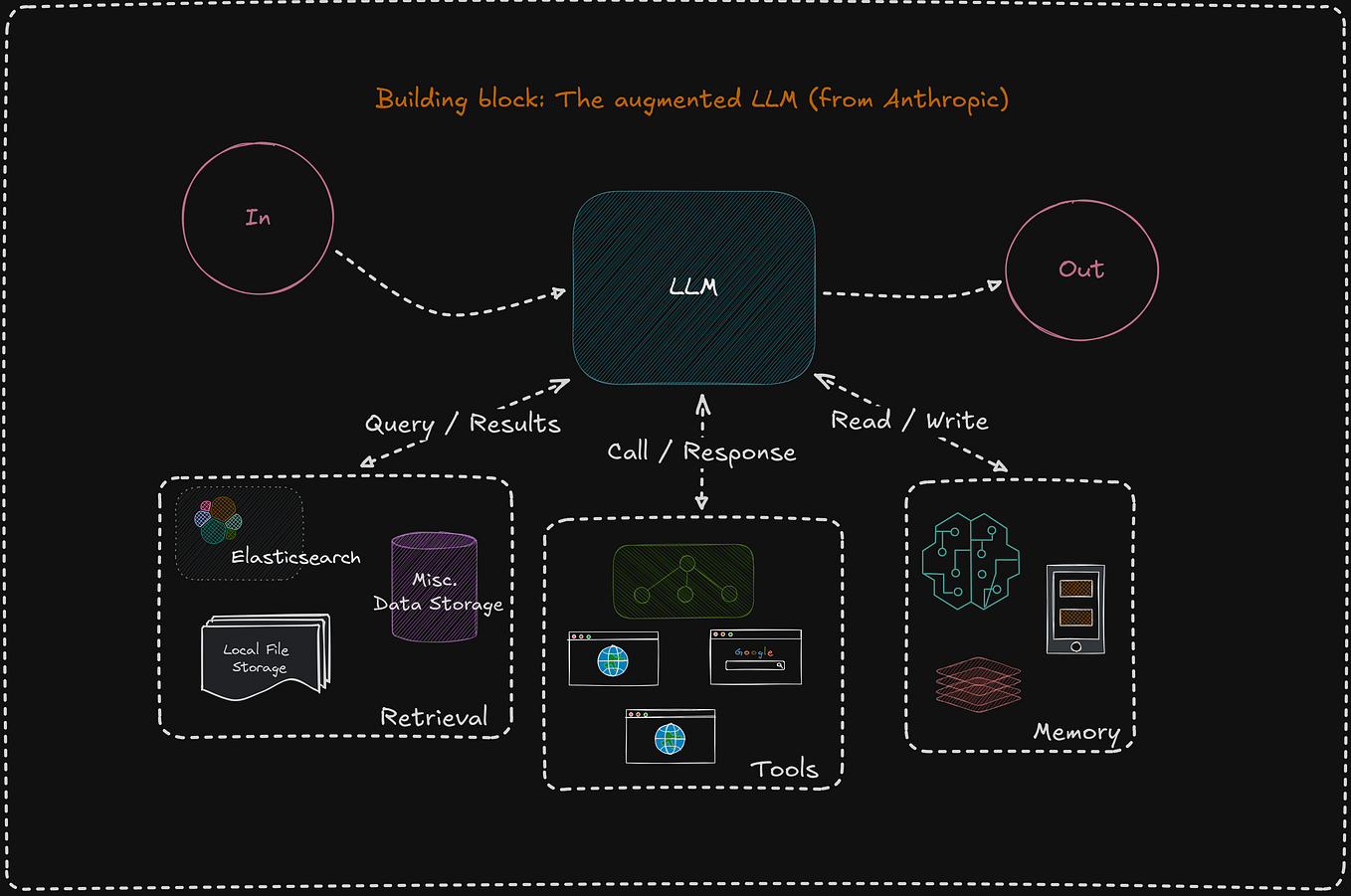

So, what exactly is MCP, or Model Context Protocol? Well, imagine you're trying to teach a super-smart AI to use a bunch of different tools, but each tool speaks a different language. That's where MCP steps in. It's an open protocol designed to standardise how large language models communicate with external systems. Think of it as a universal translator for AI!

Created by Anthropic, the brilliant minds behind the Claude family of LLMs, MCP aims to unify and simplify the interactions between AI models and the tools or APIs they need to access.

Traditionally, LLMs have been stuck with the headache of dealing with all sorts of different APIs and integration methods. MCP tackles this by defining one standard interface, built on JSON-RPC 2.0 messages, that AI models can use to discover and call a wide range of external functions and data sources. This is super important as AI models become more and more a part of our daily business, needing to use tools reliably and efficiently.
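To make that a bit more concrete, here's a minimal sketch of what a tool call looks like on the wire. MCP messages are JSON-RPC 2.0, and calling a tool uses the `tools/call` method. The tool name `calculator` and its `expression` argument are made up for illustration, so treat this as a sketch rather than a real server's contract.

```python
import json
from itertools import count

# Simple counter so every request gets a unique JSON-RPC id.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool and argument names, purely for illustration.
request = build_tool_call("calculator", {"expression": "12 * 7"})
print(json.dumps(request, indent=2))
```

Every tool on every server is called with this same message shape. Only the `name` and `arguments` change, which is the whole point of having a shared protocol.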

Since it launched in late 2024, MCP has been gaining serious momentum. Big names like OpenAI have jumped on board, recognising its potential to streamline AI workflows across different platforms, including SaaS apps and desktop tools.

Want to get super technical? Check out Anthropic’s official documentation on MCP for all the nitty-gritty details: Anthropic MCP Documentation.

The Core Components of MCP: Host, Client, and Server – Think of It Like a Restaurant!

To really understand MCP, let's break it down into its three main parts. Think of it like a restaurant:

1. MCP Host (The Customer):

The MCP Host is like the customer – it's the LLM-powered application that needs something from the outside world. For example, an AI assistant or chatbot that needs to grab some data, do some calculations, or kick off a workflow. The host relies on MCP to get access to tools it doesn't have on its own.

2. MCP Client (The Waiter):

The MCP Client is like the waiter – it takes the customer's order and makes sure it gets to the kitchen. It lives inside the host application and keeps a one-to-one connection with a single MCP Server, so a host that talks to several servers runs one client for each of them.

3. MCP Server (The Kitchen):

The MCP Server is like the kitchen – it's where all the action happens. It's a lightweight application that exposes different functions and actions that the host can use. Think of it as an API gateway, showing off all the tools and workflows that the host can call on.

This setup lets you scale things up easily. Hosts can connect to different MCP servers, each offering different skills, and servers can be updated without messing everything else up.
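If you think better in code, here's a toy, in-process version of the restaurant. It isn't the real MCP SDK and there's no networking involved. The class names (`ToyHost`, `ToyClient`, `ToyServer`) and the two example tools are invented just to show who talks to whom.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToyServer:
    """The kitchen: owns the tools and actually runs them."""
    name: str
    tools: dict[str, Callable[..., object]] = field(default_factory=dict)

    def list_tools(self) -> list[str]:
        return sorted(self.tools)

    def call_tool(self, tool: str, **arguments) -> object:
        if tool not in self.tools:
            raise KeyError(f"{self.name} has no tool named {tool!r}")
        return self.tools[tool](**arguments)


@dataclass
class ToyClient:
    """The waiter: one client per server connection."""
    server: ToyServer

    def list_tools(self) -> list[str]:
        return self.server.list_tools()

    def call_tool(self, tool: str, **arguments) -> object:
        return self.server.call_tool(tool, **arguments)


@dataclass
class ToyHost:
    """The customer: the LLM app, holding one client for each server."""
    clients: dict[str, ToyClient] = field(default_factory=dict)

    def connect(self, server: ToyServer) -> None:
        self.clients[server.name] = ToyClient(server)

    def available_tools(self) -> dict[str, list[str]]:
        return {name: client.list_tools() for name, client in self.clients.items()}

    def use(self, server_name: str, tool: str, **arguments) -> object:
        return self.clients[server_name].call_tool(tool, **arguments)


# Two independent servers, each offering a different skill.
math_server = ToyServer("math", {"add": lambda a, b: a + b})
text_server = ToyServer("text", {"shout": lambda text: text.upper() + "!"})

host = ToyHost()
host.connect(math_server)
host.connect(text_server)

print(host.available_tools())
print(host.use("math", "add", a=2, b=3))
print(host.use("text", "shout", text="order up"))
```

Notice that adding a new tool to `text_server` wouldn't touch the host or the math server at all, which is the scaling benefit described above.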

Why MCP? Because We Need a Better Way for AI to Play Together

Now, you might be thinking, "Do we really need another protocol? We already have REST APIs!" And that's a fair question. The introduction of MCP has definitely sparked some debate. But here's why MCP is different and why it's so exciting for AI-driven workflows:

It's a Universal Translator:

Every traditional API comes with its own endpoints, authentication scheme, and payload format. MCP gives LLMs one consistent way to discover and call tools, whatever system sits behind them. This makes it way easier to connect different systems.

It Lets AI Take Action:

MCP isn't just about sharing data; it lets LLMs actually do things by calling the tools a server exposes. This means AI can trigger complex processes, not just fetch information.

It Gets Better with Friends:

The more tools and platforms that adopt MCP, the more valuable it becomes. Think of it like social media – the more people use it, the more useful it is. With big players like OpenAI and Anthropic on board, MCP is off to a great start.

It's Made for AI:

MCP is designed with the unique needs of AI in mind, like handling context, managing conversations, and supporting real-time communication.
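Here's a small sketch of what "a consistent interface designed for LLMs" can look like in practice. In MCP, a server describes each tool with a `name`, a `description`, and an `inputSchema` written as JSON Schema. The calculator tool below is a made-up example, and the two helper functions are our own illustration, not part of any SDK.

```python
# A tool description in the shape MCP servers return when a host lists tools.
# The tool itself is hypothetical.
CALCULATOR_TOOL = {
    "name": "calculator",
    "description": "Evaluate a basic arithmetic expression.",
    "inputSchema": {
        "type": "object",
        "properties": {"expression": {"type": "string"}},
        "required": ["expression"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return the required argument names that the caller left out."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


def describe_for_model(tools: list[dict]) -> str:
    """Turn tool descriptions into a short text menu an LLM could read."""
    return "\n".join(f"- {tool['name']}: {tool['description']}" for tool in tools)


print(describe_for_model([CALCULATOR_TOOL]))
print(missing_arguments(CALCULATOR_TOOL, {}))
print(missing_arguments(CALCULATOR_TOOL, {"expression": "2 + 2"}))
```

Because every tool is described the same way, a host can show the model a menu of tools and sanity-check a call before sending it, without any tool-specific glue code.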

Want a more balanced view? Check out this article from The New Stack: The New Stack on MCP.

n8n’s Native MCP Integration: Automation Just Got a Whole Lot Smarter

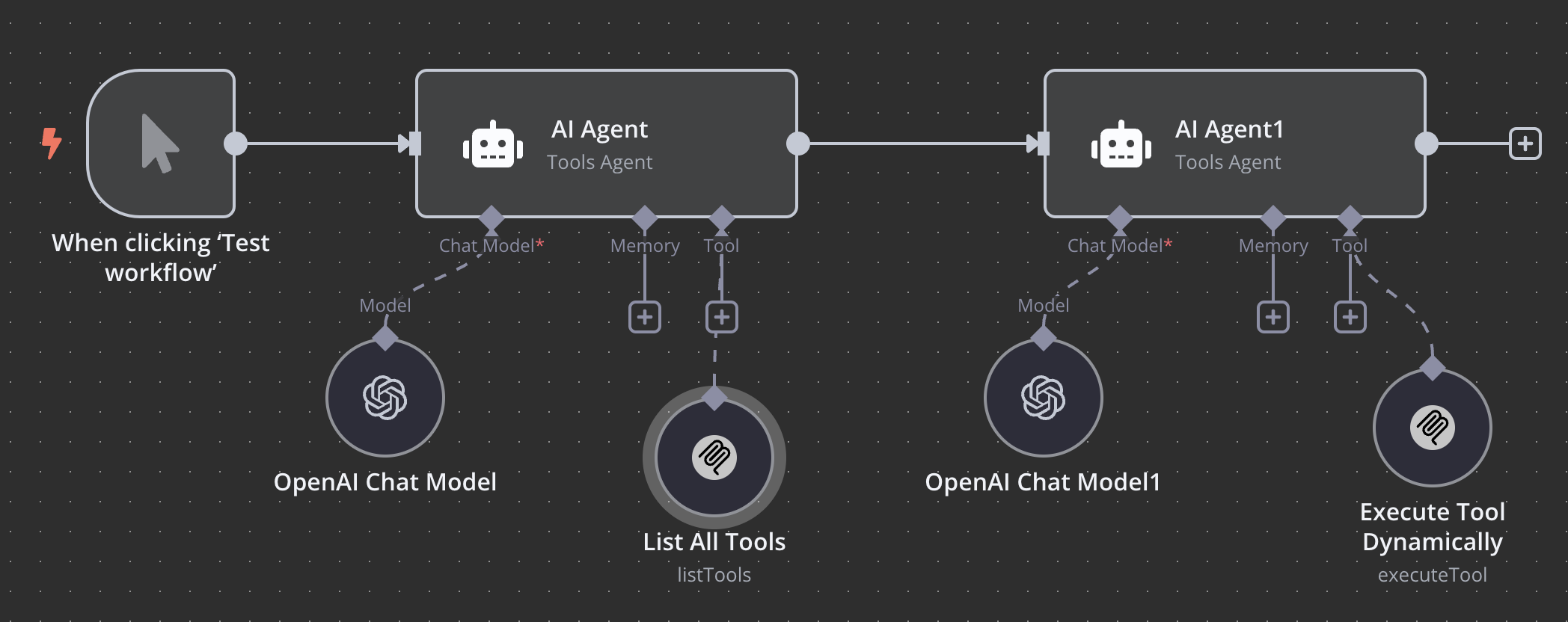

Okay, this is where things get really cool. n8n, that awesome fair-code workflow automation tool, has added native support for MCP with two dedicated nodes: the MCP Server Trigger and the MCP Client Tool. This is a big deal because:

It Lets AI Control Your Workflows:

With the MCP server trigger, you can expose your n8n workflows and tools directly to MCP hosts. This means AI models can trigger complex workflows inside n8n as if they were built-in tools. Talk about supercharging your AI!

It's Like a Custom AI Toolkit:

n8n lets you add all sorts of tools to the MCP server, like calculators or connections to cloud services. This means you can create a custom AI toolkit that's perfect for your specific needs.

It's All About Real-Time:

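The integration supports Server-Sent Events (SSE), a way for a server to stream events to a client over a single long-lived HTTP connection. That gives MCP hosts and the n8n MCP server real-time, event-driven communication, which is key for AI that needs to react quickly.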

It's a Community Effort:

The n8n team is always listening to users and making the MCP features even better. This means the integration will keep evolving to meet your needs.

This native support makes n8n a super powerful platform for building AI-augmented automation workflows, connecting LLMs with real-world business applications.
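Curious what the real-time SSE part actually looks like? An SSE stream is just text: `event:` and `data:` lines, with a blank line marking the end of each event. Below is a minimal parser sketch using only the standard library. The sample stream is invented (including the path and session id); as we understand the SSE transport, the server first announces an endpoint to post messages to and then streams JSON-RPC responses as `message` events, but check your own server's output before relying on that.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[tuple[str, str]]:
    """Yield (event_type, data) pairs from the lines of an SSE stream."""
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line ends the current event; skip it if it carried no data.
            if data:
                yield event, "\n".join(data)
            event, data = "message", []
            continue
        if line.startswith(":"):
            continue  # Comment line, often used as a keep-alive ping.
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # A single leading space after the colon is dropped.
        if field == "event":
            event = value
        elif field == "data":
            data.append(value)


# Invented sample stream for illustration only.
SAMPLE_STREAM = """event: endpoint
data: /messages?sessionId=abc123

: keep-alive

event: message
data: {"jsonrpc":"2.0","id":1,"result":{"content":[{"type":"text","text":"84"}]}}

"""

events = list(parse_sse(SAMPLE_STREAM.splitlines()))
for event_type, payload in events:
    print(event_type, "->", payload)

# The second event carries a JSON-RPC response with the tool's text output.
answer = json.loads(events[1][1])["result"]["content"][0]["text"]
print("tool answered:", answer)
```

In a real setup the host's MCP client does this parsing for you. The sketch is only here to show there's no magic in the stream itself.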

Want to learn more about n8n? Head over to their official website: n8n Official Website.

Setting Up MCP in n8n: It's Easier Than You Think!

Setting up MCP inside n8n might sound complicated, but it's actually pretty straightforward. Here's a quick rundown:

Add the MCP Server Trigger:

Start with a fresh workflow in n8n and add the MCP server trigger node. This is where the magic starts!

Add Tools to the Server:

Connect tools like a calculator node to the MCP server. This lets the LLM host hand off tasks, like calculations, to n8n.

Activate the Workflow:

Make sure your workflow is turned on so it can respond to MCP requests.

Configure the MCP Host:

Use an MCP host application, like the Claude Desktop app, to connect to the n8n MCP server. You'll need to enable developer mode in the host app and set up your credentials with the server’s SSE endpoint.

Test It Out:

From the host, try using the tools exposed by the MCP server and see if they work. For example, ask it to do a calculation and check the answer.

Troubleshooting:

If your host can only launch local MCP servers and can't connect to a remote SSE endpoint directly, you might need a "Super Gateway" to bridge the connection between the two.

This setup creates a powerful partnership where AI hosts can tap into n8n’s awesome automation skills.
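For the host configuration step, here's a hedged sketch of how you might generate a Claude Desktop config entry that reaches the n8n server through a bridge. A few assumptions to flag loudly: we're assuming the "Super Gateway" mentioned above is the open-source `supergateway` npm package and that it accepts an `--sse <url>` argument (please verify against its README), and the n8n URL is a placeholder, not a real endpoint. The `mcpServers` layout with `command` and `args` is the format Claude Desktop uses for locally launched servers.

```python
import json


def bridge_config(server_label: str, sse_url: str) -> dict:
    """Build an `mcpServers` entry that launches a local bridge to a remote SSE server."""
    return {
        "mcpServers": {
            server_label: {
                "command": "npx",
                # Assumption: the bridge is the `supergateway` package and takes `--sse <url>`.
                "args": ["-y", "supergateway", "--sse", sse_url],
            }
        }
    }


# Placeholder URL: copy the real SSE endpoint from your own MCP server trigger node.
config = bridge_config("n8n", "https://n8n.example.com/mcp/my-tools/sse")
print(json.dumps(config, indent=2))
```

You'd paste the printed JSON into the host's config file (merging it with any servers already listed there), restart the host, and then run the calculation test described above.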

Need more detailed instructions? Check out n8n’s community forum and documentation: n8n Community.

Technical Tips and Best Practices for MCP: Making Sure Everything Runs Smoothly

Deploying MCP in the real world takes a bit of care to make sure everything works perfectly:

Enable Developer Mode:

Many MCP hosts, like Claude Desktop, need developer mode turned on to allow custom MCP client connections.

Use SSE:

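The n8n MCP server communicates over Server-Sent Events (SSE), which keep a long-lived HTTP connection open for real-time communication. Make sure your network can handle that: firewalls need to allow the connection, and any reverse proxy sitting in front of n8n shouldn't buffer or prematurely time out the stream.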

Super Gateway for Compatibility:

If your MCP host doesn't support direct MCP client communication, a Super Gateway can help bridge the gap.

Keep Your Credentials Safe:

Securely manage your credentials and endpoints for MCP servers. Use environment variables or secure vaults to store sensitive info.

Watch Your Logs:

Keep an eye on your MCP server logs and workflow metrics to catch any issues early and make sure everything is running smoothly.

Join the Community:

Connect with the n8n and MCP communities to share your experiences, report bugs, and suggest new features.

By following these tips, you can get the most out of MCP while keeping things safe and reliable.
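As a quick illustration of the credentials tip, here's a minimal sketch that reads the server URL and token from environment variables instead of hard-coding them. The variable names `N8N_MCP_SSE_URL` and `N8N_MCP_TOKEN` are our own invention, and we're assuming the server is protected with a bearer token. Adjust both to match however your setup is actually secured.

```python
import os
from collections.abc import Mapping


def load_mcp_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Read the MCP endpoint and token from the environment, failing loudly if absent."""
    # Hypothetical variable names; pick whatever fits your deployment.
    url = env.get("N8N_MCP_SSE_URL")
    token = env.get("N8N_MCP_TOKEN")
    missing = [
        name
        for name, value in (("N8N_MCP_SSE_URL", url), ("N8N_MCP_TOKEN", token))
        if not value
    ]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {"url": url, "headers": {"Authorization": f"Bearer {token}"}}


def redact(secret: str, keep: int = 4) -> str:
    """Mask a secret so it is safe to write to logs."""
    if len(secret) <= keep:
        return "*" * len(secret)
    return secret[:keep] + "*" * (len(secret) - keep)


# Demo with a fake environment so the example runs anywhere.
demo_env = {
    "N8N_MCP_SSE_URL": "https://n8n.example.com/mcp/my-tools/sse",
    "N8N_MCP_TOKEN": "s3cret-token",
}
settings = load_mcp_settings(demo_env)
print("endpoint:", settings["url"])
print("token:", redact(demo_env["N8N_MCP_TOKEN"]))
```

Failing fast on a missing variable and redacting the token before logging it covers two of the tips at once: the secret stays out of your code and out of your logs.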

Conclusion: The Future of AI is Here, and It's Looking Bright!

The Model Context Protocol is a big step forward in helping large language models work better with the world around them. It solves a lot of the problems with traditional APIs and offers a unified, executable interface that's perfect for AI workflows.

n8n’s native integration of MCP opens up a whole new world of automation possibilities, letting AI hosts easily use complex workflows and tools. This partnership between AI and automation platforms like n8n is set to change how businesses use artificial intelligence.

At Syrvi.ai, we're super excited about these developments and how they can improve AI-driven solutions. We encourage you to explore MCP, play around with n8n’s integration, and help shape the future of AI automation.

Further Reading and Resources

Got questions? Want to know how Syrvi.ai can help you build AI-powered workflows with MCP and n8n? Get in touch with our experts today! We're here to help you make AI work for you.